Introduction to Nodes

Overview

Teaching: 45 min

Exercises: 0 minQuestions

What is an OTN-style Database Node?

What is expected from a Node Manager?

Who is this training for?

What is the schedule for the next several days?

Objectives

Understand the relationship between OTN and its Nodes.

Ensure attendees have required background information.

What is a Node?

OTN partners with regional acoustic telemetry networks around the world to enable detection-matching across our communities. An OTN Node is an exact copy of OTN’s acoustic telemetry database structure, which allows for direct cross-referencing between the data holdings of each regional telemetry sharing community. The list of OTN Nodes is available here: https://members.oceantrack.org. Data only needs to be reported to one Node in order for tags/detections to be matched across all.

How does a Node benefit its users?

OTN and affiliated networks provide automated cross-referencing of your detection data with other tags in the system to help resolve “mystery detections” and provide detection data to taggers in other regions. OTN Data Managers perform extensive quality control on submitted metadata to ensure the most accurate records possible are stored in the database and shared with researchers. OTN’s database and Data Portal website are well-suited for archiving datasets for future use and sharing with collaborators. The OTN system and data workflows include pathways to publish datasets with the Ocean Biodiversity Information System, and for sharing via open data portals such as ERDDAP and GeoServer. The data product returned by OTN is directly ingestible by populare acoustic telemetry data analysis packages including glatos, actel, remora, and resonATe. In addition to the data curation materials, OTN offers continuous support and workshop materials detailing the use of these packages and tools.

Below is a link to a presentation from current Node Managers, describing the relationship between OTN and its Nodes, the benefits of the Node system as a community outgrows more organic person-to-person sharing, as well as a realistic understanding of the work involved in hosting/maintaining a Node.

Node Managers

To date, the greatest successes in organizing telemetry communities has come from identifying and working with local on-the-ground Node Managers for each affiliated Node. The trusted and connected ‘data wranglers’ have been essential to building and maintaining the culture and sense of trust in each telemetry group.

In order to be successful as a Node Manager in your region, here are a few tips and guidelines:

- Ensure you set aside time each week to wear your ‘Node Manager hat’.

- Familiarize yourself with the metadata reporting templates, and follow carefully the formats required for each variable.

- Ensure you have continuous communication with the OTN data team so you are able to align your detection matching with all other Nodes, and keep your local toolbox up to date.

- Ensure you have consistent communication with your local telemetry community - knowing who’s who is important for relationship building and growing the community.

- Be willing to learn, and ask questions - we are always trying to improve our tools and processes!

No previous coding or data management experience is required to manage a Node. Anyone who is willing to put in the work to become a Data Manager can be successful. Being involved in the telemetry community as a researcher (or affiliate) is enough to get you started with ‘data wrangling’ for your telemetry community.

Node Training

Each year OTN hosts a training session for Node Managers. This session is not only for new Node Managers, but also a refresher for current Node Managers on our updated tools and processes.

This is a hands-on course, participants will be using the tools to practice loading telemetry data with us, usually using a Training Node we have built for this purpose. This means you will need to install all required software and devote full attention for the next several days.

Here are the general topics that will be covered:

- OTN Node structure and database maintenance

- Data loading workflow: from metadata to detection extracts, and how we track progress in GitLab

- Practice interfacing with an OTN Node database in DBeaver, using SQL scripts

- Practice using OTN’s Nodebooks to quality control, process and verify records, using python and Jupyter notebooks

- Overview of OTN’s Data Push process, and how Node Managers are involved

- Data Policy guidelines as a Node Manager

If you do not intend on learning how to load data to an OTN-style Node (and would prefer instead to be a spectator) please let us know, so we can identify who our hands-on learners will be.

A great resource for Node Managers as they get started will be OTN’s FAQ page. Your local telemetry community will likely have many questions about the Node and how it works, and the FAQs can help answer some of these questions.

Key Points

Your Node is fully compatible with all others like it.

A well-connected Node Manager is essential to serving the research community.

OTN staff are always availble to support Node Managers.

Upholding the Data Policy

Overview

Teaching: 15 min

Exercises: 0 minQuestions

How do I handle data requests?

Objectives

Understand how to uphold the Network’s Data Policy.

As an Node Data Manager, you will have the key responsibility to uphold your Network’s Data Privacy Policy and/or Data Sharing Agreement. You have access to every member’s public and private datasets, a level of access that not even your direct supervisors will have.

For this reason, you need to be intimately familiar with the types of data which are under embargo/private, and what is publicly available according to the Data Policy / Data Sharing Agreement for your Network. You will need to develop an internal protocol for when requests for data access are submitted, to ensure appropriate care is taken to protect the integrity of your members’ data.

OTN recommends creating a “Data Request Response Policy”.

External Requests - Restricted Data

This is an example of how OTN handles these requests:

- Request for data (from person other than data owner) submitted

- Data request is scoped, in a GitLab Issue. All details from requester is included.

- Impacted PIs are identified, and contacted, seeking written permission for requester to access the information.

- Written permission is documented in GitLab Issue, to preserve the paper-trail.

- Data request report is compiled and provided to requester, once all permissions have been received.

Internal Requests

Internal requests, from other Network staff, need to be handled in a similar way. While all Network staff should be familiar with the Data Policy, this may not be the case. It is the Node Manager’s responsibility to ensure that information from the database is not shared outside of internal reports. We need to track requests from all sources, for OTN this even includes the OTN Glider and Field teams, so we can have a record of who has asked for what, and be able to enforce the appropriate Data Policies.

Partner-Node Data Policies

In order to meet everyone’s expectations when it comes to data sharing and maximize the utility of tags releeased, there is one shared rule across all participating Node data policies and data sharing agreements. All nodes and OTN agree that the Data Policy of the Network which holds the tag records will apply to the detections of those tags, in all of the other Nodes in which it is detected. You cannot share information/detections of tags from outside your Node, without first consulting with your partner’s Data Policy. This will likely not be an issue for most Nodes, but is a key consideration for OTN staff when creating data products.

For guidance on the interpretation of your Data Policy, or the Data Policy of a parter Node, OTN, and especially the Director of Data Operations, is available to assist.

Key Points

Node Managers have a key responsbility to ensure the Data Policy is followed.

OTN System, Structure and Outputs

Overview

Teaching: 25 min

Exercises: 0 minQuestions

What does an OTN-style Database look like?

What is the general path data takes through the OTN data system

What does the OTN data system output?

Objectives

Understand the OTN Database structure on a high level

Understand the general path of data of data through the OTN system

Understand what the OTN system can output

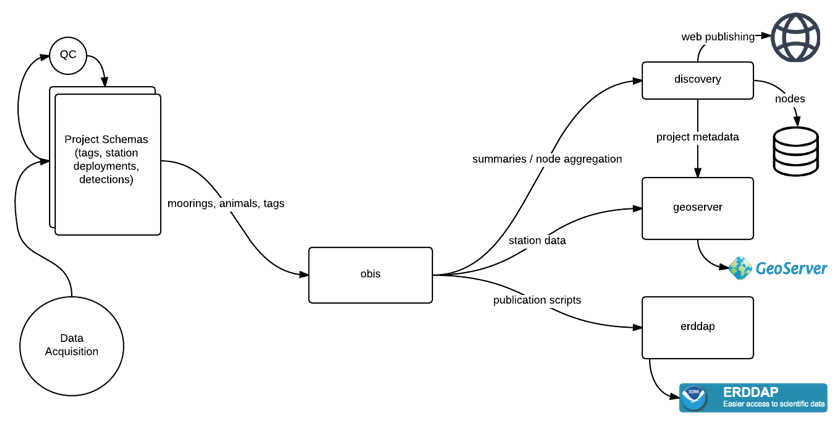

OTN Data System

The OTN data system is an aggregator of telemetry data made up of interconnected Node Databases and data processing tools. These work together to connect researchers with relevant and reliable data. At the heart of this system are Nodes and their OTN-style Databases.

Affiliated acoustic telemetry partner Networks may become an OTN Node by deploying their own database that follows the same structure as all the others. This structure allows Nodes to use OTN’s data loading processes, produce OTN data products, and match detections across other Nodes.

Basic Structure

The basic structural decision at the centre of an OTN-style Database is that each of a Node’s projects will be subdivided into their own database schemas. These schemas contain only the relevant tables and data to that project. The tables included in each schema are created and updated based on which types of data each project is reporting.

Projects can have the type tracker, deployment, or data.

- Tracker projects only submit data about tag releases and animals. They get tables based on the tags, animals, and detections of those tags.

- Deployment projects only submit data about receivers and their collected data. These projects get tables related to receiver deployments and detections on their receivers.

- Data projects are projects that deploy both tags and receivers and will submit data related tags, animals, receivers, and detections and will get all the related tables.

In addition to the project-specific schemas, there are some important common schemas in the Database that Node Managers will interact with. These additional schemas include the obis, erddap, geoserver, vendor, and discovery schemas. These schemas are found across all Nodes and are used to create important end-products and for processing.

- The

obisschema holds summary data describing each project contained in the Node as well as the aggregated data from those projects. When data goes into a final table of the project schema it will be inherited into a table inobis(generally with a similar name). - The

erddapschema holds aggregated data re-formatted to be used to serve telemetry data via an ERDDAP data portal. - The

geoserverschema holds aggregated data re-formatted to be used to create geospatial data products published to a GeoServer. - The

vendorschema holds manufacturer specifications for tags and receivers, used for quality control purposes. - The

discoveryschema holds summaries of data across the OTN ecosystem. These tables are used to create summary reports and populate statistics and maps on partner webpages.

The amount of information shared through the discovery tables can be adjusted based on sharing and reporting requirements for each Node.

The Path of Data

The OTN data system takes 4 types of data/metadata: project, tag, instrument deployments, and detections. Most data has a similar flow through the OTN system even though each type has different notebooks and processes for loading. The exception to this is project metadata which has a more unique journey because it is completely user-defined, and must be used to initially define and create a project’s schema.

flowchart BT

tag_start(( )) --> tag_sheet[[tagging metadata sheets]]

style tag_start fill:#00FF00,stroke:#00FF00,stroke-width:4px

tag_sheet --> tag_raw(c_tag_meta_suffix)

style tag_raw fill:#6495ED

tag_raw --> animcache(animalcache_suffix)

style animcache fill:#CF9FFF

tag_raw --> tagcache(tagcache_suffix)

style tagcache fill:#CF9FFF

animcache --> otnanim(otn_animals)

style otnanim fill:#FDDA0D

tagcache --> otntra(otn_transmitters)

style otntra fill:#FDDA0D

otnanim --> obisanim(otn_animals)

style obisanim fill:#B8B8B8

otntra --> obismoor(moorings)

style obismoor fill:#B8B8B8

rcv_start(( )) --> rcv_sheet[[deployment metadata sheets]]

style rcv_start fill:#00FF00,stroke:#00FF00,stroke-width:4px

rcv_sheet --> rcv_raw(c_shortform_suffix)

style rcv_raw fill:#6495ED

rcv_raw --> stat(stations)

style stat fill:#CF9FFF

rcv_raw --> rcv(rcvr_locations)

style rcv fill:#CF9FFF

stat --> moor(moorings)

style moor fill:#FDDA0D

rcv --> moor

moor --> obismoor

det_start(( )) --> det_sheet[[detection instrument data]]

style det_start fill:#00FF00,stroke:#00FF00,stroke-width:4px

det_sheet --> event_raw(c_events_suffix)

style event_raw fill:#6495ED

event_raw --> events(events)

style events fill:#CF9FFF

events --> moor

det_sheet --> det_raw(c_detections_suffix)

style det_raw fill:#6495ED

det_raw --> det(detections_yyyy)

style det fill:#CF9FFF

det --> otndet(otn_detections_yyyy)

style otndet fill:#FDDA0D

otndet --> obisdet(otn_detections_yyyy)

style obisdet fill:#B8B8B8

obisanim --> obis[(Parent schema)]

style obis fill:#B8B8B8,stroke:#000000

obismoor --> obis

obisdet --> obis

obis --> done(( ))

style done fill:#FF0000,stroke:#FF0000

Project Data

Project data has a unique workflow from the other input data and metadata that flows into an OTN Node, it is generally the first bit of information received about a project, and will be used to create the new schema in the Database for a project. The type of project selected (tracker, deployment, or data) will determine the format of the tables in the newly created schema. The type of project will also impact the loading tools and processes that will be used later on. The general journey of project data is:

- To register a new project a researcher will fill out a project metadata template and submit it to the Node Manager.

- The Node Manager will visually evaluate the template to catch any obvious errors and then run the data through the OTN Nodebook responsible for creating and updating projects (

Create and Update Projects). - The

Create and Update Projectsnotebook will make a new schema in the Database for that project, and fill it with the required tables based on the type of project. - Summary tables are populated at this time (

scientificnames,contacts,otn_resourcesetc). - After this, OTN analysts will verify the project one last time to make sure every necessary field is filled out and properly defined.

Tag, Deployment and Detections Data

Even though tag, deployment, and detections data all have their own loading tools and processes, their general path through the database is the same.

- Their data workflows all begin with a submission of data or metadata files from a researcher.

- The Node Manager ensures there is a copy of the file on the Node’s document management website.

- The Node Manager carries out visual quality control to catch any obvious errors.

- The data is then processed through the relevant OTN Nodebooks. This process is outlined by the task list associated with the GitLab Issue made for this data.

- The data will first be loaded into the “raw” tables. This is the table that holds the raw data as submitted by the researcher (the naming convention for raw tables is that they always have the prefix

c_and will have a suffix indicating the date it was loaded, typicallyYYYY_MM). - After the raw data table is verified, the data will move to the “intermediate” tables which act as a staging area for partially-processed data.

- After the intermediate table is verified, data will move to the “upper” tables, where the data is finished processing and is in its final form. This is the data that will be used for aggregation tables such as

obisand for outputs such as Detection Extracts.

OTN Data Products

The OTN Database has specific data products available, based upon the clean processed data, for researchers to use for their scientific analysis.

In order to create meaningful Detection Extracts, OTN and affiliated Nodes only perform cross-matching events every 4 months (when a reasonable amount of new data has been processed). This event is called a synchronous Data Push. In a Data Push:

- All recently-loaded data is verified and updated.

- Detections are matched to their relevant tag across all Nodes (Cross-Node Matching).

- Once cross-node matching is done, Detection Extracts are created, containing all the new detections matches for each project. Detection Extract files are formatted for direct ingestion by analysis packages such as glatos and resonate.

- Summary schemas like

discovery,erddap, andgeoserverare updated with the newly verified data.

Summary schema records can be used to create maps and other record overviews such as this map of active OTN receivers:

Backing Up Your Data

As with any database, it is important to make sure the data held by the OTN Database Node is protected. To ensure you are protected your Database and potentially the files contributed to your Node by the research community you support should be backed up properly, in the event your primary Database crashes, is corrupted, is lost, or any other unexpected event.

You should discuss and coordinate a backup strategy with the groups or institutions responsible for your Database’s administration, and find out their policies and practices for performing backups. Backup strategies may vary from group to group but it is a good idea to make sure they are adequately backing up your data daily, if not multiple times a day, and keep copies of backups in different physical locations in the case that something happens at a single location.

OTN is happy to offer guidance and recommendations to any group.

Key Points

All OTN-style Databases have the same structure

Databases are divided into project schemas which get certain tables based on the type of data they collect

Data in the OTN system moves from the raw tables to the intermediate tables to the upper tables before aggregation

Setup and Installing Needed Software

Overview

Teaching: 10 min

Exercises: 50 minQuestions

What software does a Node Manager need?

Why do I need the recommended software?

How do I install the required software?

Objectives

Understand how to install required software and prepare to load data

Ensure attendees have the required software installed and are ready to use it

The NodeBook environment and supporting software

In order to work efficiently as a Node Manager, the following programs are necessary.

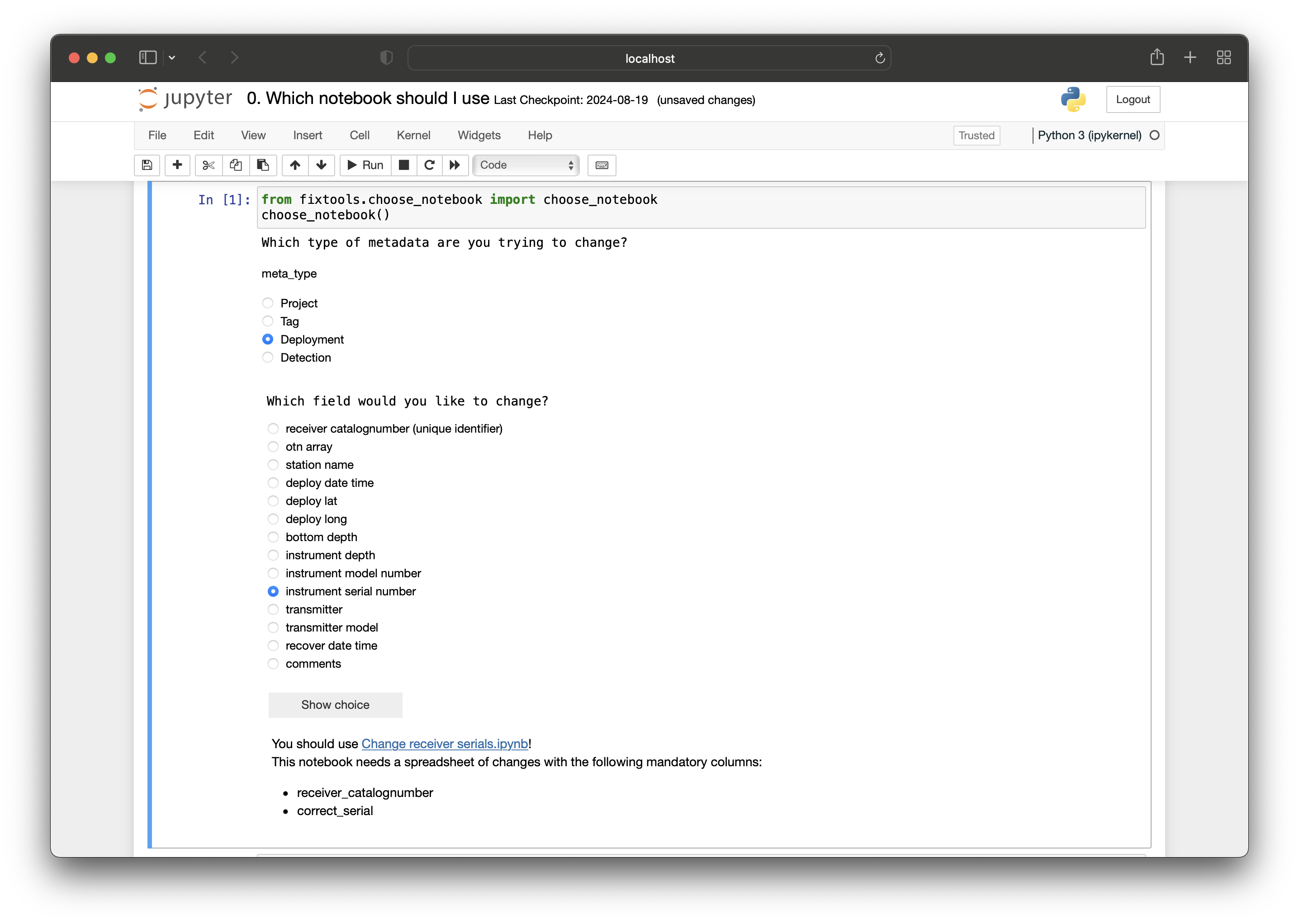

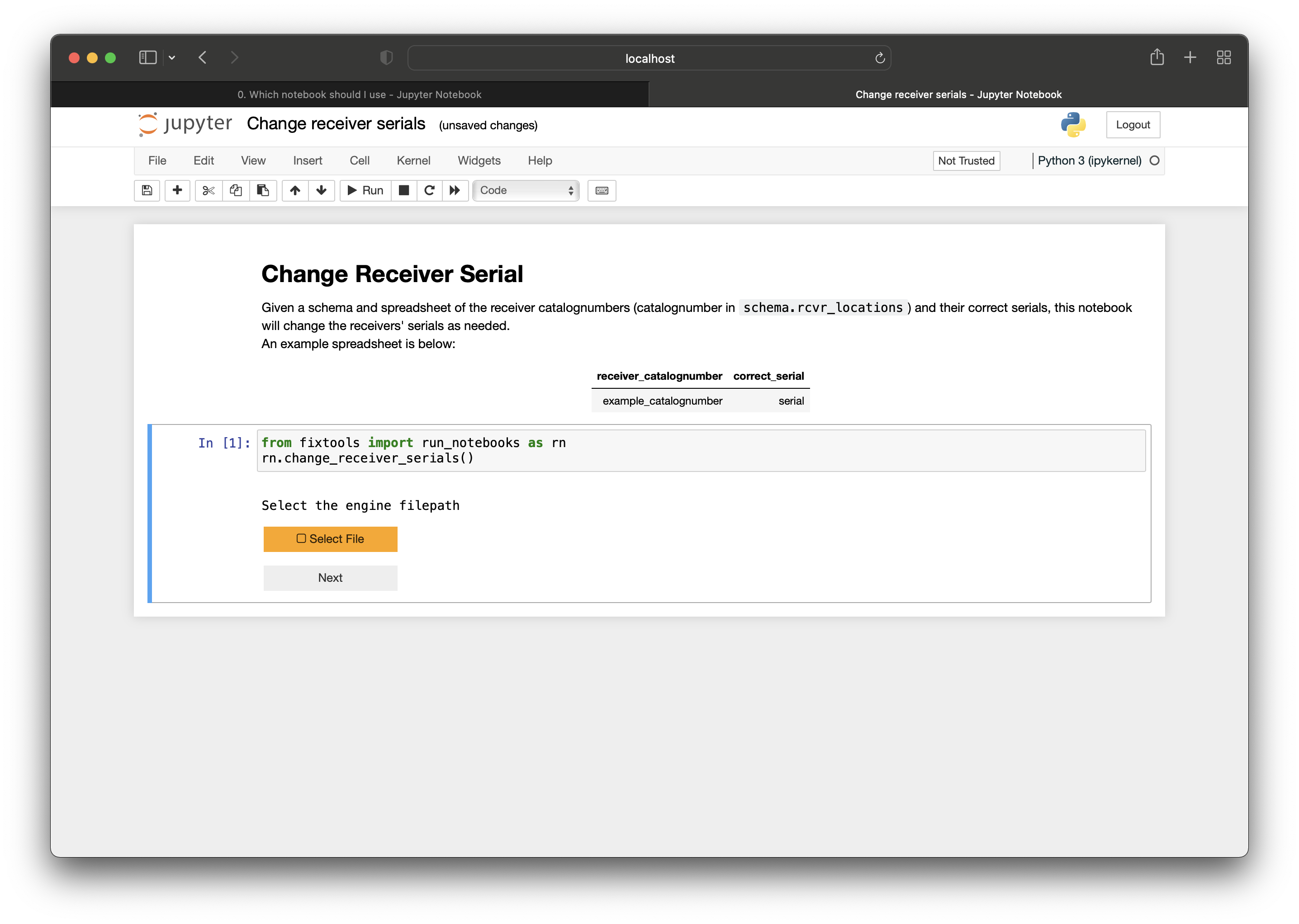

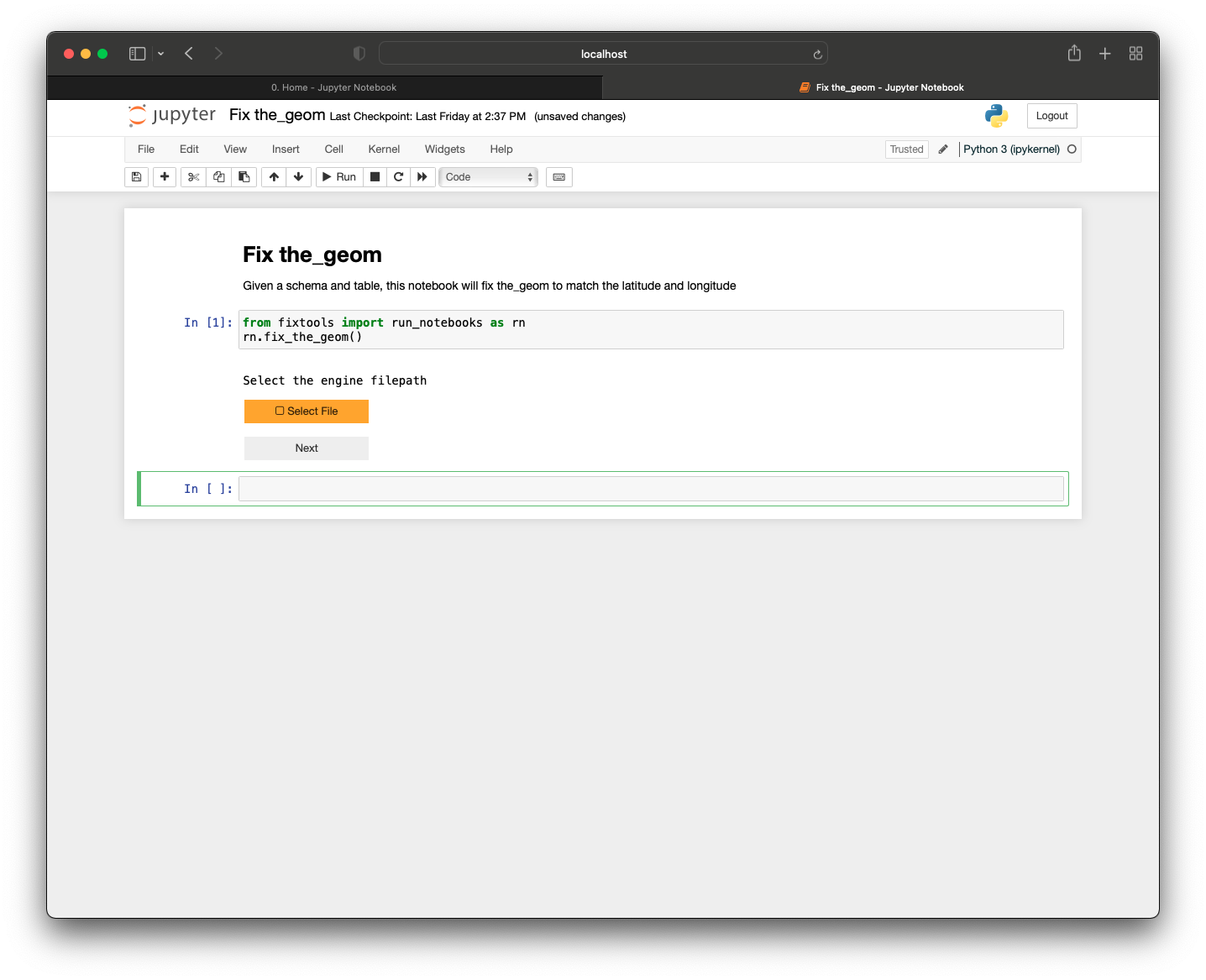

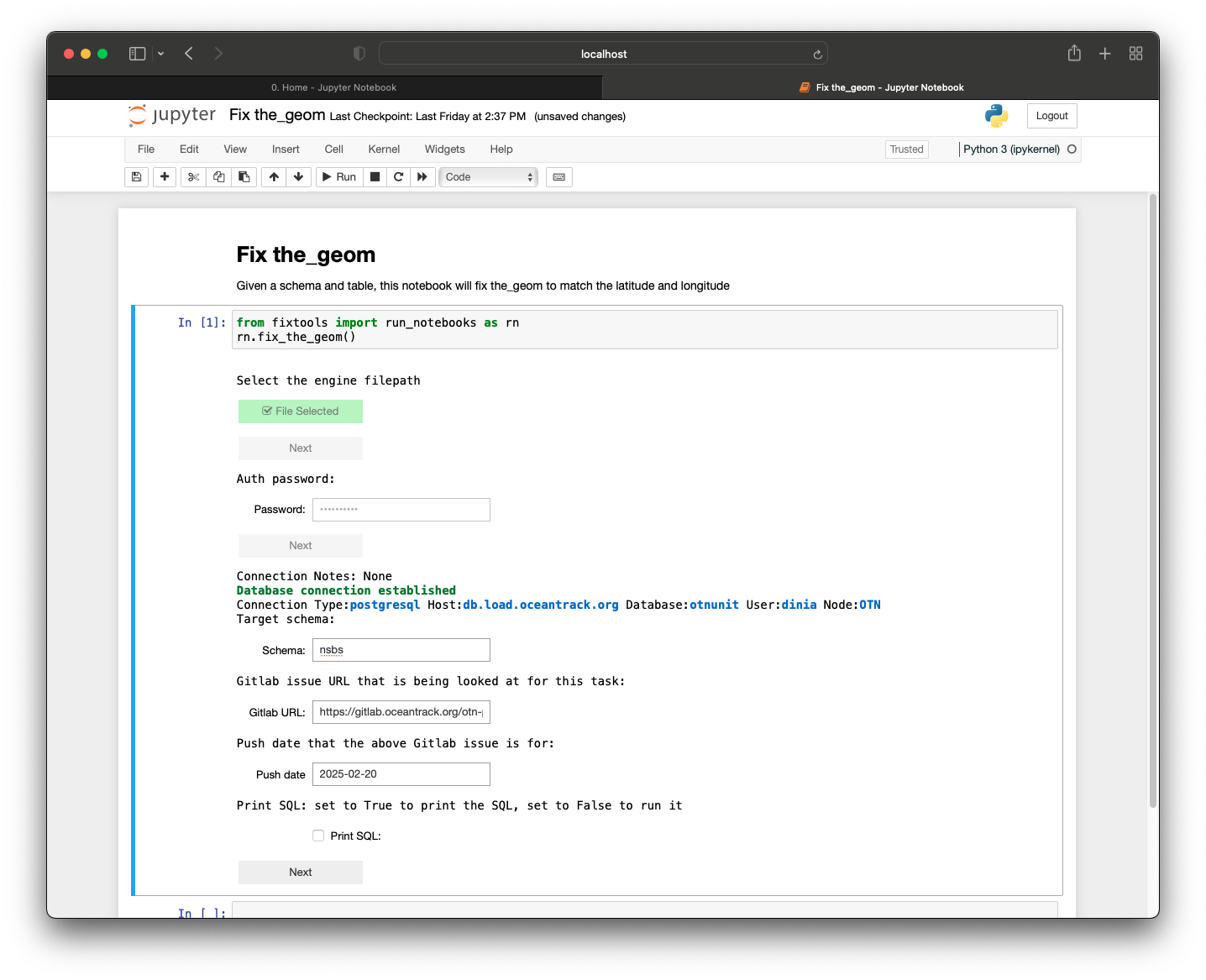

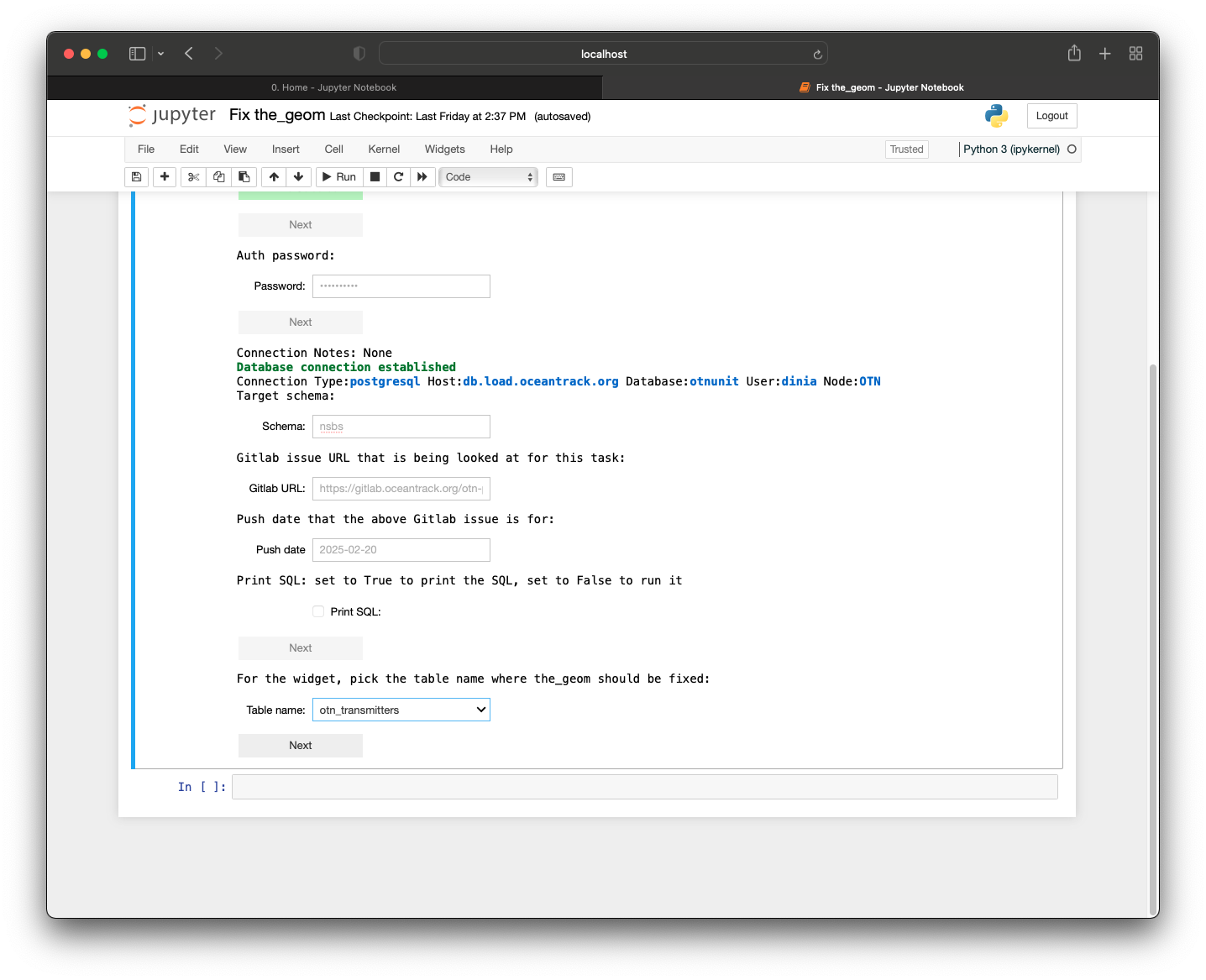

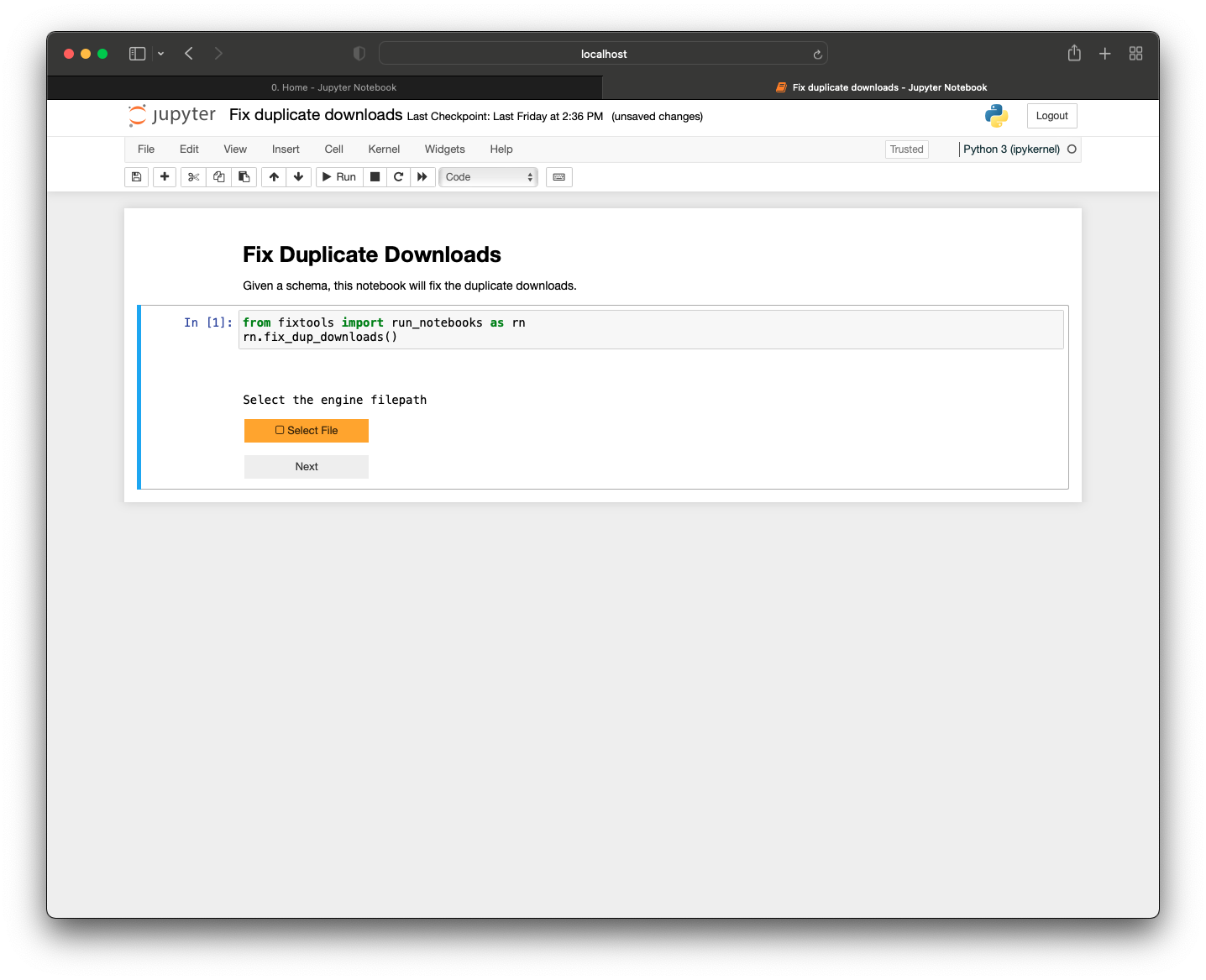

To standardize the verification and quality control process that all contributing data is subjected to, OTN has built custom quality control workflows and tools for Node Managers, often referred to as the OTN Nodebooks. The underlying functions are written in Python and workflows that rely on them can be undertaken through the use of Jupyter Notebooks. In order to use these tools, and interact with your database, you will need to install a Python environment and the software packages that support the workflows. Updates to these tools, as well as up-to-date installation instructions are always available on the OTN GitLab.

This lesson will give attendees a chance to install all the relevant software, under the supervision of OTN staff.

Python/Mamba

Python is a general-purpose programming language that has become the most popular language on GitHub and in many of the computational sciences. It is the main language used by OTN to standardize our data processing pipeline.

Mamba is a fast, cross-platform Python distribution and package manager. When you install Mamba (through Miniforge) you get a self-contained version of the Python interpreter (which enables your computer to run Python code), and many of the core Python libraries. Managing your Python installation with Mamba allows you to install and keep updated all the supporting packages needed for the Nodebooks with one command rather than having to install each one individually.

Miniforge Windows - https://conda-forge.org/miniforge/

- Select the option install for Just Me (recommended).

- Check the option to Add Miniforge3 to my PATH environment variable.

Miniforge Mac -

- Setup homebrew by running the command:

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"- Note: this operation requires elevated privileges (sudo) - Use the commands provided by brew’s installation output to add brew to your system path

- Use brew to install miniforge:

brew install miniforge - Add miniforge to your zsh environment by typing conda init zsh. Restart the terminal

Miniforge Linux (Debian)

- Download the Shell script (.sh) file from https://conda-forge.org/miniforge/

- Recommended: Choose Python 64-bit Linux Installer

- Change the run permissions for the miniforge installer script. ie

chmod +x Miniforge3-[version]-Linux-x86_64.sh - Run

./Miniforge3-[version]-Linux-x86_64.shin the Linux terminal to activate the installer. - Add this conda installation to your terminal environment by running

conda init. Restart the terminal to see the changes reflected.

Git

Git is a version-control system for text, it helps people to work on code collaboratively, and maintains a complete history of all changes made to the files in a project. We use Git at OTN to track and disseminate changes to the Nodebooks that are made by our developer team, and occasionally you will need to use Git to update your Nodebooks and receive those changes.

Install Git

-

Windows- https://git-scm.com/download/win

-

Linux (Debian) - run the command:

sudo apt install git

Nodebooks - iPython Utilities

The ipython-utilities project contains the collection of Jupyter notebooks used to load data into the OTN data system.

Create an Account

First, you will need a GitLab account. Please fill out this signup form for an account on GitLab.

Then, OTN staff will give you access to the OTN-Partner-Nodes group, which hosts all of the relevant Projects for Node Managers.

Install iPython Utilities

- Determine the folder in which you wish to keep the iPython Utilities Nodebooks.

- Open your terminal or command prompt.

- Type

cdfollowed by a space. - You then need to get the filepath to the folder in which you wish to keep the iPython Utilities Nodebooks. You can either drag the folder into the terminal/command prompt OR right-click on the folder, select ‘Copy as Path’ from the dropdown menu, and paste the result into the terminal/command prompt.

- You should have a command that looks like

cd /path/to/desired/folder. - Press Enter, and your terminal/command prompt will navigate to the folder you provided.

- Type

- Create and activate the “nodebook” python enviornment. The creation process will only need to happen once.

- In your terminal, run the command

conda create -n nodebook python=3.9 - Activate the nodebook environment by running

conda activate nodebook

- In your terminal, run the command

- Next, run:

git clone https://gitlab.oceantrack.org/otn-partner-nodes/ipython-utilities.git. This will get the latest version iPython Utilities from our GitLab. - Navigate to the newly-created ipython-utilities subdirectory by running

cd ipython-utilities. - Switch to the

integrationbranch (which contains the most up-to-date code) by runninggit checkout integration. - Now to install all required python packages by running the following:

mamba env update -n nodebook -f environment.yml

To open and use the OTN Nodebooks:

- MAC/WINDOWS/LINUX: Open your terminal and navigate to your ipython-utilities directory by running

cd /path/to/ipython-utilities. Then, run the commands:conda activate nodebookto activate the nodebook python environmentjupyter notebook --config="nb_config.py" "0. Home.ipynb"to open the Nodebooks in a browser window.

- DO NOT CLOSE your terminal/CMD instance! This will need to remain open in the background in order for the Nodebooks to be operational.

More operating system-specific instructions and troubleshooting tips can be found at: https://gitlab.oceantrack.org/otn-partner-nodes/ipython-utilities/-/wikis/New-Install-of-Ipython-Utilities

Database Console Viewer

There are database administration applications to assist with interacting directly with your database. There are many options available but DBeaver and DataGrip are the most popular options at OTN.

- https://dbeaver.io/ (free and open access - recommended)

- https://www.jetbrains.com/datagrip (free institutional/student access options - another option)

In the next lesson we will practice using our database console viewer and connecting to our node_training database.

More Useful Programs

In order to work efficiently as a Node Manager, the following programs are necessary and/or useful.

Cross-Platform

Visual Studio Code - An advanced code editing integrated development environment (IDE). Also contains extensions that can run JuPyTeR notebooks, open CSV files in a visually appealing way, as well as handle updating your Git repositories.

For WINDOWS users

Path Copy Copy - For copying path links from your file browser. Since many of the notebooks require you to provide the path to the file you wish to load, being able to copy and paste the entire path at once can save a lot of time.

Notepad++ - For reading and editing code, csv files etc. without altering the formatting. Opening CSV files in Excel can change the formatting of the data in the file (this is a common problem with dates). Notepad++ will allow you to edit CSV files (and code, if necessary) without imposing additional formatting on data.

Tortoise Git - For managing git, avoiding command line. Depending on what new features have been recently added, you may be asked to use a different branch of the notebook repository than the main one (i.e. integration). Although using git through the command line is supported, you may prefer to manage your Nodebooks via a graphical user interface (GUI). Tortoise Git can provide that.

For MAC users

Source Tree - For managing git, avoiding command line.

Node Training Datasets

We have created test datasets to use for this workshop. Each attendee has their own files, available at this link: http://129.173.48.161/data/repository/node_training/node-training-files-1

Please find the folder with your name and download. Save these somewhere on your computer, and UNZIP all files.

Key Points

Node Manager tasks involve the use of many different programs

OTN staff are always available to help with installation of these programs or any issues

There are many programs and tools to help Node Managers

Data Loading Workflow

Overview

Teaching: 30 min

Exercises: 45 minQuestions

How does a Node Manager receive data?

How does a Node Manager track their To-Do list?

How can a Node Manager interact with their database directly?

Objectives

Understand the data-loading workflow

Understand how to create and use GitLab Issues

Understand how to access and query your database tables

Understand how to use the

AUTH - Create and Updatenotebook to maintain your database credentials file

Data Managers receive data from a researcher and then begin the process of QA/QC and data matching:

- Records are received and a GitLab Issue is created.

- Data are QA/QC’d using the OTN Nodebooks, and all progress is tracked in GitLab. Feedback between Data Manager and researchers happens at this stage, until data is clean and all GitLab tasks are completed.

- Successful processing can be checked by using DBeaver to query and explore the database.

flowchart LR

data_start(( )) --> get_data(Receive metadata </br>from researchers)

style data_start fill:#00FF00,stroke:#00FF00,stroke-width:4px

get_data --> gitlab(Create Gitlab issue </br>with template)

gitlab --> viz{Visually inspect, </br>does metadata have errors?}

viz --yes--> req(Request corrected data </br>from researchers)

req --> end1(( ))

style end1 fill:#FF0000,stroke:#FF0000

viz --no--> run_checklist(Run data through checklist)

run_checklist --> otn_part(Pass to OTN for </br>final verification)

otn_part --> end2(( ))

style end2 fill:#FF0000,stroke:#FF0000

Researcher data submission

There are many ways to receive data from researchers in your community/group. Find and make official the way that works for your community and ensure that way becomes standard practice for reporting to your Node.

File management website

The most common way to receive data and metadata from a researcher is through some type of file management website. This will require either an email notification system for the Node Manager or constant checking to look for new submissions.

OTN-managed Nodes can always use the same Plone file management portal software that OTN itself uses to create and maintain private-access data repository folders into which researchers can deposit their data and metadata. These private folders also serve as the location where Detection Extracts are distributed to users, when available.

The FACT Network currently uses a custom instance of Research Workspace for the same purpose.

The ACT and GLATOS Networks use a custom data-submission form managed through their networks’ web sites.

Its common for groups of researchers to use DropBox, Google Drive, or something similar to share data/metadata when the Network is still small. This can be a great, accessible option but the caveat is that is is much more difficult to control access to each individual folder to protect the Data Policy, and it may be difficult to determine when new data has been submitted.

Email-only submission

Generally, each Node Manager has an email address for communicating with their network’s data submitters (ex: Data @ TheFACTNetwork . org). This is a great way to ensure all Node-related emails are contained in the same account in the case of multiple Node Managers or the succession of a new Node Manager. With proper email management, this can be a very successful way to ask Node users to submit their data/metadata to your Node.

It is not recommended to use a personal email account for this, since all the files and history of the project’s data submissions will be lost if that Manager ever moves away from the role. If the account is hosted at an institution, it may be advisable to submit requests to raise institutional limits on constraints like email storage in advance.

Documenting data submission

Using one of the suggested means above, a user has submitted data and metadata to the Node Manager. Now what?

OTN uses GitLab Issues with templates of task-lists to ensure we NEVER forget a step in data loading, and that no file is ever lost/forgotten in an inbox.

Immediately upon receipt of a data file, you are advised to login to OTN’s GitLab. You will have a project for your Node named

Once on the GitLab project page, you should navigate to the Issues menu option, on the left side. Think of your GitLab issues as your running “TODO List”! You will want to create a new Issue for each piece of data that is submitted.

NOTE: GitLab Issues are often referred to as “tickets”

Creating GitLab issues

By choosing the New Issue button in the top-right of your screen, you will be taken to a new, blank, issue form. You will need to fill out the following fields:

- Title: Write the project name/code, the type of data submitted, and the submission date, this makes the ticket searchable in the future (eg:

HFX tag metadata 2022-02) - Type: Should be type

Issue. - Description:

- There are pre-made Templates to choose from here, using the drop down menu. Ensure you choose the relevant checklist for the type of data that was submitted (eg:

Tag_metadata). This will populate the large description field! - Ensure you include the link to the submitted data file OR use the

Attach a fileoption to attach a copy of the submitted data file to the issue.

- There are pre-made Templates to choose from here, using the drop down menu. Ensure you choose the relevant checklist for the type of data that was submitted (eg:

- Assignee: Assign to yourself if this is a task for you, or to anyone else to whom you want to delegate.

- Milestone: These are the upcoming Data Push dates. You should choose the nearest future PUSH date as the Milestone for this issue.

- Labels: This is for your reference - choose a label that will help you remember what stage of processing this issue is in. Some common examples include

Needs QC,Waiting for Metadata,Waiting for VRLs,Request PI Clarificationetc. You can create new labels at any time to help sort your tickets.

With the above information supplied, you can click the Create Issue button.

Using GitLab to track progress

As you approach the deadline for data-loading, before a data PUSH, you should begin to work on your Issues which fall under that Milestone. When you open an issue, you will be able to see the remaining tasks to properly load/process that data along with the name of the OTN Nodebook you should use to complete each task.

Keep GitLab open in your browser as you work through the relevant Nodebooks. You should check off the tasks as you complete them, and insert any comments you have into the bottom of the ticket. Comments can include error messages from the Nodebook, questions you have for the researcher, any re-formatting required, etc. At any time you can change the Labels on the issue, to help you remember the issue’s status at a glance.

Once you are done for the day, you’ll be able to come back and see exactly where you left off, thanks to the checklist!

You can tag anyone from the OTN Data Team in your GitLab issue (using the @NAME syntax). We will be notified via email to come and check out the Issue and answer any questions that have been commented.

Once you have completed all the tasks in the template, you can edit the Assignee value in the top-right corner, and assign to someone from OTN’s Database team (currently, Angela or Yinghuan). They will complete the final verification of the data, and close the issue when completed. At this time, you can change the issue Label to Verify, or something similar, to help visually “mark it off” your issue list on the main page.

GitLab practice

At this time we will take a moment to practice making GitLab Issues, and explore other pages on our GitLab like, Milestones, Repository, Snippets, and Wiki.

Database access

As part of the OTN workflow, it may be prudent to use a database client like DBeaver to view the contents of your Node’s database directly and make sure the data has been loaded as expected.

DBeaver is an open-source application for interacting directly with databases. There are lots of built-in tools for query writing and data exploration. We will assume that workshop attendees are novices in using this application.

Connecting to your database

For this training we will connect to a Node Training test database, as practice. Once you open DBeaver, you will need to click on the Database menu item, and choose New Database Connection. A popup will appear, and you will choose the PostreSQL logo (the elephant) then click Next. Using the .auth file provided to you by OTNDC you will complete the following fields:

- Host: this could be something like

matos.asascience.comfor your DB, but we will use the IP address:129.173.48.161for our Node Training DB. - Database: this will be your database name, something like

pathnode. For training, it will benodetraining. - Port: this is specified in your

.authfile and will be four digits. For training, this port will be set to5432. - Username/Password: your personal username and password. For training, your username will be your first initial plus last name (ex:

jsmith). Your password will be this username backwards.

Next, choose Test Connection and see if it passes the tests. If so, choose Finish and you’re now connected to your database!

On the left-side you should now see a Database Navigator tab, and a list of all your active database connections. You can use the drop down menu to explore all the schemas aka: collections stored in your database. You can even view each individual table, to confirm the creation steps in the Nodebooks were successful.

Writing a query in DBeaver

If you wish to write a query to see a specific portion of your already-loaded data, you should first open a new SQL console. Choose SQL Editor from the top menu, then New SQL Script. A blank form should appear.

While writing SQL is out of the scope of this course, there are many great SQL resources available online. The general premise involves creating conditional select statements to specify the data you’re interested in. As an example, select * from hfx.rcvr_locations where rcv_serial_no = '12345'; will select all records from the HFX schema’s rcvr_locations table where the serial number is 12345.

To run a query, ensure your cursor (the vertical line that shows where you are editing text) is on the line you want to run, then either 1) right-click, and choose Execute, or 2) press CTRL-ENTER (CMD-ENTER for Mac). The results of your query will be displayed in the window below the SQL console.

OTN is here to support you as you begin to experiment with SQL queries and the OTN database structure, and can help you build a library of helpful custom queries that you may want or need.

Exercise: Database Querying

Let’s take a moment to explore some of the tables in the Node Training database, and write some example SQL queries.

Key Points

Node-members cannot access the database, you are the liason

Data submissions and QC processes should be trackable and archived

OTN is always here to help with any step of the process

Connecting to the Database from the Nodebooks

Overview

Teaching: 15 min

Exercises: 15 minQuestions

How can a Node Manager connect with their database in the Nodebooks?

How can a Node Manager connect with other Nodes for matching?

How can a Node Manager use Gitlab Automation in the Database Fix Nodebooks?

Objectives

Understand how to use the

AUTH - Create and Updatenotebook to maintain your database credentials file

Connecting to your Database from the Nodebooks

Now that we have explored and set up some of the tools needed to work as a Node Manager, we can begin preparing our Nodebook connection files. To enhance security, OTN uses encrypted, password-protected .kdbx files to store login credentials for your database. To create these, we have developed an interactive AUTH - Create and Update Nodebook.

- Open the OTN Nodebooks

- Open your terminal, and navigate to your ipython-utilities directory, using

cd /path/to/ipython-utilities.Then, run the commands:conda activate nodebookto activate the nodebook python environmentjupyter notebook --config="nb_config.py" "0. Home.ipynb"to open the Nodebooks in a browser window.

- Open your terminal, and navigate to your ipython-utilities directory, using

- Your Nodebooks should open in a new browser window, showing the

Homepage. - Open the

AUTH - Create and UpdateNodebook

Imports cell

This section will be common for most Nodebooks: it is a cell at the top of the notebook where you will import any required packages and functions to use throughout the notebook. It must be run first, every time.

Path to file

This cell needs to be edited. Between the quotes you will type the filepath to the .kdbx file you would like to create, or one which already exists that you would like to edit. The format should look like:

file_location = 'C:/Users/path/to/node_auth.kdbx'

Run this cell to save the input.

Create Password

Run this cell. You will be prompted to create a password for the file (if it is a new file) or to enter the existing password if you are accessing an existing file. Ensure that you remember this master password, as you will be using it every time you connect to the database through the Nodebooks.

Create or Update Main Connections

Run this cell. This section will have an editable form. If it is a new file, all fields will be blank. If it is an existing file, the previously-entered information will display. You may now edit the information, pressing the blue button when you are finished to save your results.

- Conn Name: this is customizable - what is the name of this connection? We recommend choosing something like “OTN Database” to help you remember.

- Host: this will be something like

matos.asascience.comfor your DB, but for training purposes we will use the IP of our Node Training DB:129.173.48.161. - Port: this is specified in your

.authfile and will be four digits. Use5432for Node Training. - DB Name: this will be your database name, something like

pathnode. For training, it will benodetraining. - Username/Password: your personal username and password. For training, your username will be your first initial plus last name (ex:

jsmith). Your password will be this username backwards.

Create or Update DBLink Connections

In order to match detections across databases, you will need to establish DBLink connections to other OTN Nodes. This information can be stored in your .kdbx file, and will give you limited access to information required to match detections and create detection extracts.

Run this cell. This section will have an editable form. If it is a new file, all fields will be blank, and you can choose Add Connection. If it is an existing file, the previously-entered information will display for each DBLink Connection you’ve specified. You may now edit the information, pressing the update or save button when you are finished to save your results.

Please contact OTN if you need any of the following information:

- Conn Name: this is customizable - what is the name of this connection? Something like

fact-link-to-migramaris informative. - Host: this will be something like

matos.asascience.comfor the DB you are trying to connect to (i.e. not your own Node db host). - Port: this will be the port required to connect to the remote DB (not your Node db).

- DB Name: this will be the name of the database you are trying to connect to (not your Node db), something like

otnunit. - User/Password: the username and password of the DBLink user for your database. Not your own node admin’s connection information.

Once you have saved your new DBLink connection, you can create another. Continue until you have established connections to all remote Nodes. Currently, you will require DBLinks to 7 Nodes, but this is rapidly expanding.

Test Connections

The next two cells will test the connection information you entered. Success messages will look like this for your main connection:

Auth password:········

Connection Notes: None

Database connection established

Connection Type:postgresql Host:db.your.org Database:your_db User:node_admin Node: Node

and like this for your DBLink connections:

Testing dblink connections:

fact-link-to-Node1: DBLink established on user@db.load.oceantrack.org:5432 - Node: NODE1

fact-link-to-Node2: DBLink established on user@db.load.oceantrack.org:5432 - Node: NODE2

fact-link-to-Node3: DBLink established on user@db.load.oceantrack.org:5432 - Node: NODE3

fact-link-to-Node4: DBLink established on user@db.load.oceantrack.org:5432 - Node: NODE4

fact-link-to-Node5: DBLink established on user@db.load.oceantrack.org:5432 - Node: NODE5

fact-link-to-Node6: DBLink established on user@db.load.oceantrack.org:5432 - Node: NODE6

fact-link-to-Node7: DBLink established on user@db.load.oceantrack.org:5432 - Node: NODE7

You are now able to use the filepath to your .kdbx file to run all the Nodebooks.

Change KDBX password

If you have been passed a template .kdbx file from OTNDC with prefilled information, you should use this section to change the password to ensure your privacy is protected.

You will need to enter:

- Current Password (provided by OTNDC) for your template file

- New Password (of your choosing)

Press Save to change the password of your .kdbx. Ensure that you remember this password, as you will be using it every time you connect to the database through the Nodebooks.

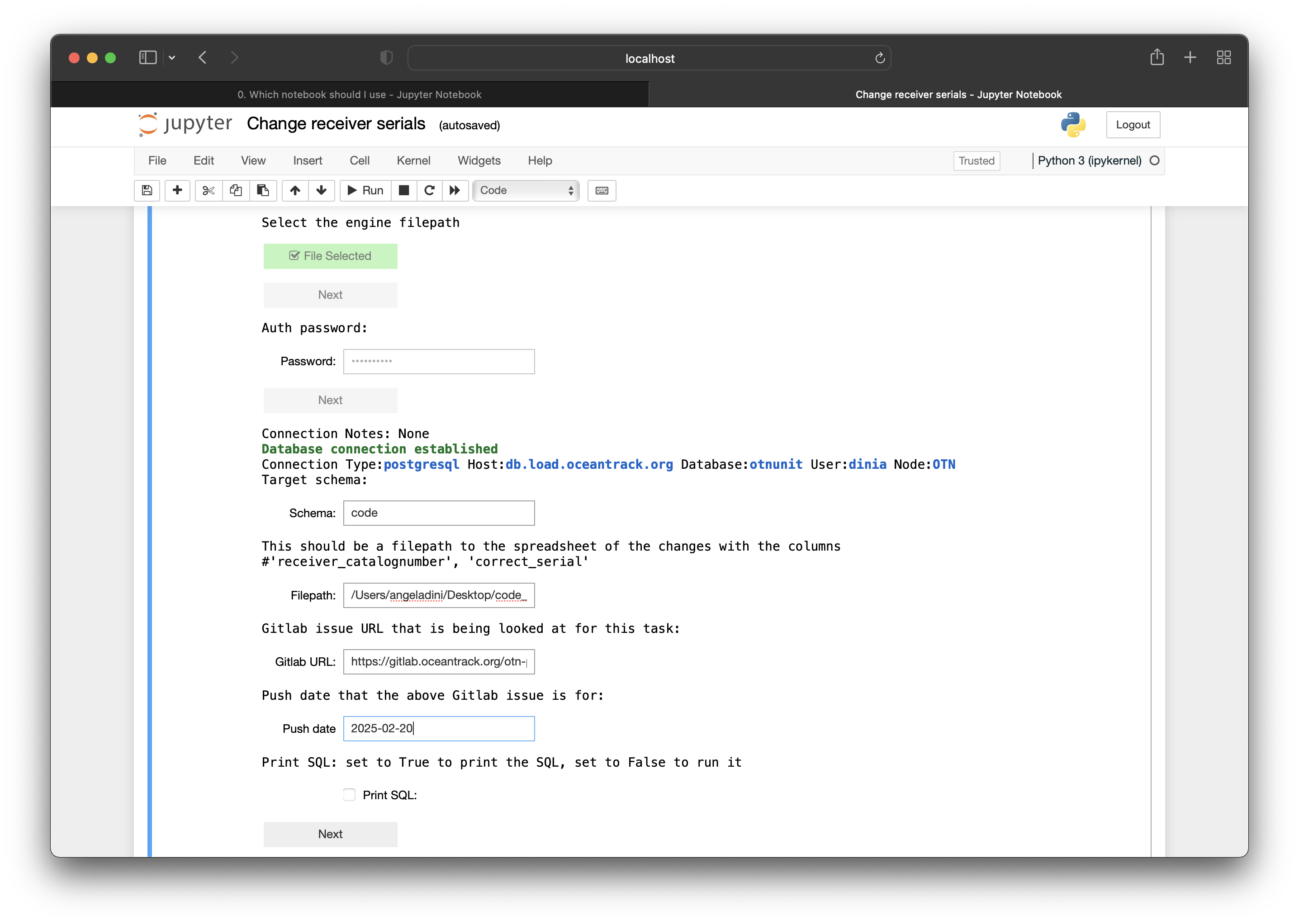

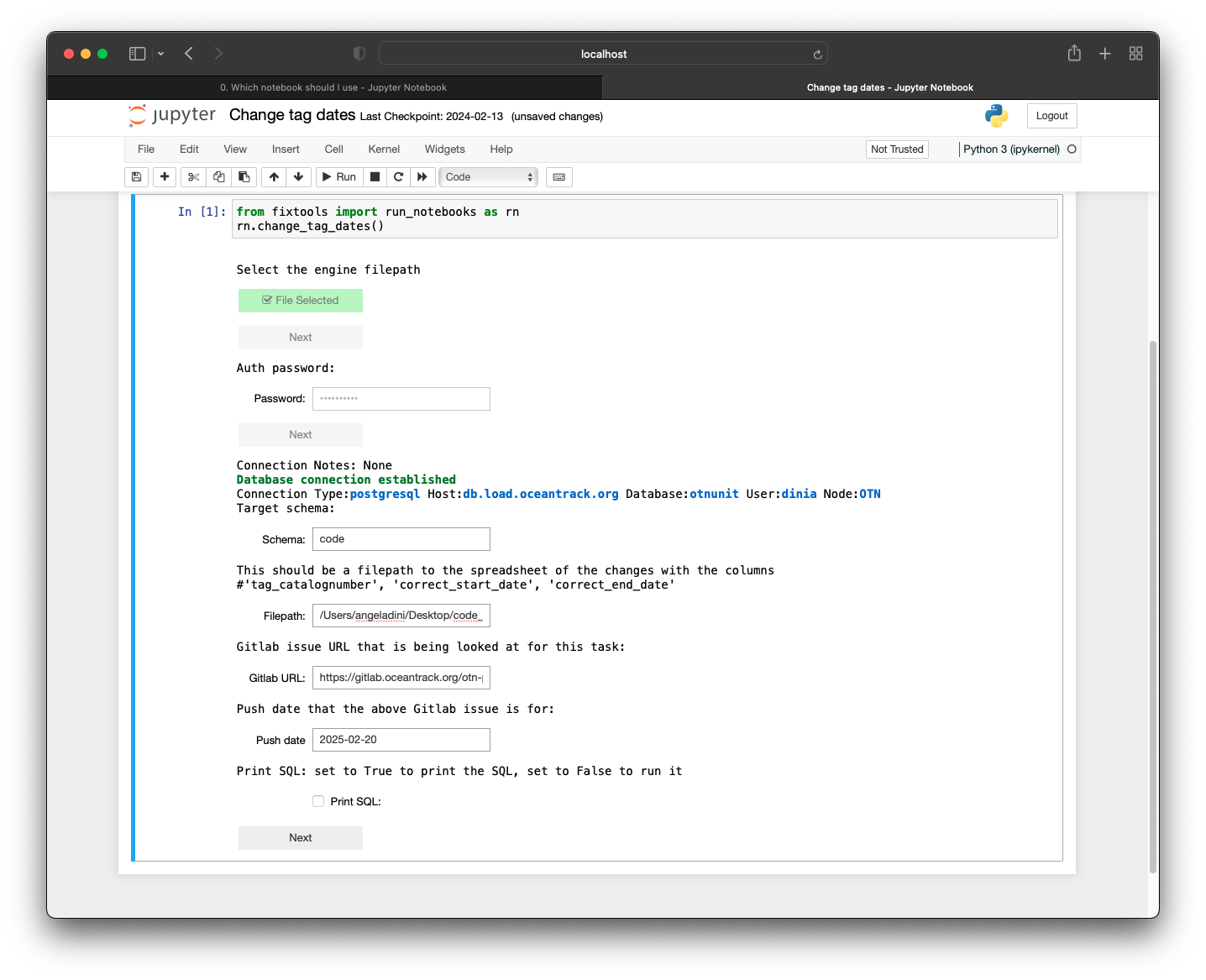

Add a Git Access Token

This will be relevant for users of the Database Fix suite of Nodebooks only. If you are not going to use these tools, you can skip this cell in the Nodebooks.

A Gitlab Access Token will allow Nodebooks to access your GitLab account and insert comments into an Issue directly, as you are working on it. This has been developed for the Database Fix Notebooks to ensure all changes made within the notebooks are documented in GitLab properly. The automation is part of the OTNGitlabAutomation package.

Instructions to create a Personal Access Token are found on our wiki here

You can create one by following the steps below:

- In the top-right corner of gitlab, click your avatar.

- Select Edit profile.

- On the left sidebar, select Access Tokens. Enter a name for your token and optionally set expiry date for the token.

- Under ‘Select scopes’ select ‘api’.

- Select Create personal access token.

- A new token will be created at the top of the page. Make sure you save it somewhere as you won’t be able to access it again. Treat this token like a password as it can be used to access GitLab under your user.

Once you have created your access token, run this cell.

You will need to enter your personal access token.

Press Add Token to insert the token into your .kdbx.

Re-running this cell will allow you to update your access token any time it expires.

Key Points

Node-members cannot access the database, you are the liaison

Data submissions and QC processes should be trackable and archived

OTN is always here to help with any step of the process

Project Metadata

Overview

Teaching: 90 min

Exercises: 0 minQuestions

How do I register a new project in the Database?

Objectives

Understand how to complete the template

Understand how to use the Gitlab checklist

Learn how to use the

Create and Update ProjectsnotebookLearn how to use the

Create Plone folders and add usersnotebook

Process workflow

The process workflow for project metadata is as follows:

flowchart LR

proj_start(( )) --> get_meta(Receive

project metadata

from researchers)

style proj_start fill:#00FF00,stroke:#00FF00,stroke-width:4px

get_meta --> gitlab(Create

Gitlab

issue)

gitlab --> inspect(Visually

inspect)

inspect --> nodebook(QC with

nodebooks)

nodebook --> plone(Verify repository

folder

is correct)

plone --> email(Email project

contacts

onboarding information)

email --> otn(Pass to

OTN)

otn --> end2(( ))

style end2 fill:#FF0000,stroke:#FF0000

The first step when you are contacted by a researcher who wants to register their project with the database is to request Project Metadata. For most Nodes, this is in the form of a plaintext .txt file, using the template provided here. This file allows the researcher to provide information on the core attributes of the project, including the scientific abstract, associated investigators, geospatial details, temporal and taxonomic range.

Completed Metadata

Immediately upon receipt of the metadata, you must create a new Gitlab Issue. Please use the Project Metadata Issue checklist template.

Here is the Issue checklist, for reference:

Project Metadata

- [ ] - NAME add label *'loading records'*

- [ ] - NAME define type of project **select here one of Data, Deployment, Tracker**

- [ ] - NAME create schema and project records (`Creating and Updating project metadata` notebook)

- [ ] - NAME add project contact information (`Creating and Updating project metadata` notebook)

- [ ] - NAME add scientificnames (`Creating and Updating project metadata` notebook)

- [ ] - NAME verify all of above (`Creating and Updating project metadata` notebook)

- [ ] - NAME [Plone-users only] create new project repository users (`Create Plone Folders and Add Users` notebook)

- [ ] - NAME [Plone-users only] create project repository folder (`Create Plone Folders and Add Users` notebook)

- [ ] - NAME [Plone-users only] add project repository users to folder (`Create Plone Folders and Add Users` notebook)

- [ ] - NAME [Plone-users only] access project repository double-check project repository creation and user access

- [ ] - NAME add project metadata file to relevant project folder (Plone site, Research Workspace etc)

- [ ] - NAME email notification of updated metadata file to PI and individual who submitted

- [ ] - NAME send onboarding email to all contacts

- [ ] - NAME label issue with *'Verify'*

- [ ] - NAME pass issue to OTN DAQ staff

- [ ] - NAME [OTN only] manually identify if this is a loan, if so add record to otnunit.obis.loan_tracking (`Creating and Updating project metadata` notebook)

- [ ] - NAME [OTN only] if this is a loan, update links for PMO

- [ ] - NAME pass issue to OTN analyst for final verification

- [ ] - NAME verify project in database

**project metadata txt file**

Visual Inspection

Once the researcher provides the completed file, the Data Manager should complete a visual check for formatting and accuracy.

Please make sure of the following:

- Is the PI-provided collection code unique/appropriate? Do you need to create one yourself? Existing schemas/collection codes can be seen in the database.

- Are there typos in the title or abstract?

- Are the contacts formatted correctly?

- Are the species formatted correctly?

- Does the location make sense based on the abstract, and is it formatted correctly (one per line)?

Often, the Contacts section has been improperly formatted. Pay close attention here.

Below is an example of a properly completed metadata form, for your reference.

===FORM START===

0. Intended/preferred project code, if known? (May be altered by OTNDC)

format: XXXX (3-6 uppercase letters that do not already have a representation in the OTN DB. Will be assigned if left blank)

NSBS

1. Title-style description of the project?

format: < 70 words in 'paper title' form

OTN NS Blue Shark Tracking

2. Brief abstract of the project?

format: < 500 words in 'abstract' form

In the Northwest Atlantic, the Ocean Tracking Network (OTN), in collaboration with Dalhousie University, is using an acoustic telemetry infrastructure to monitor the habitat use, movements, and survival of juvenile blue sharks (Prionace glauca). This infrastructure includes state-of-the-art acoustic receivers and oceanographic monitoring equipment, and autonomous marine vehicles carrying oceanographic sensors and mobile acoustic receivers. Long-life acoustic tags (n=40) implanted in the experimental animals will provide long-term spatial resolution of shark movements and distribution, trans-boundary migrations, site fidelity, and the species’ response to a changing ocean. This study will facilitate interspecific comparisons, documentation of intra- and interspecific interactions, and permit long-term monitoring of this understudied predator in the Northwest Atlantic. The study will also provide basic and necessary information to better inform fisheries managers and policy makers. This is pertinent given the recent formulation of the Canadian Plan of Action for Shark Conservation.

3. Names, affiliations, email addresses, and ORCID (if available) of researchers involved in the project and their role.

The accepted Project Roles are defined as:

Principal Investigator: PI or Co-PI. The person(s) responsible for the overall planning, direction and management of the project.

Technician: Person(s) responsible for preparation, operation and/or maintenance of shipboard, laboratory or deployed scientific instrumentation, but has no invested interest in the data returned by that instrumentation.

Researcher: Person(s) who may use/analyse the data to answer research questions, but is not the project lead. Can be a student if their involvement spans past the completion of an academic degree.

Student: Person(s) researching as part of a project as part of their work towards an academic degree.

Collaborator: A provider of input/support to a project without formal involvement in the project.

Please add 'Point of Contact' to the contact(s) who will be responsible for communicating with OTN.

format: Firstname Lastname, Employer OR Affiliation, Project Role (choose from above list), email.address@url.com, point of contact (if relevant), ORCID

Fred Whoriskey, OTN, principal investigator, fwhoriskey@dal.ca, 0000-0001-7024-3284

Sara Iverson, OTN, principal investigator, sara.iverson@dal.ca

Caitlin Bate, Dal, researcher, caitlin.bate@dal.ca, point of contact

4. Project URL - can be left blank

format: http[s]://yoursite.com

https://members.oceantrack.org/

5. Species being studied?

format: Common name (scientific name)

blue shark (Prionace glauca)

sunfish (Mola mola)

6. Location of the project?

format: (city, state/province OR nearby landmark OR lat/long points in decimal degree), one per line

Halifax, NS

44.19939/-63.24085

7. Start and end dates of the project, if known?

format: YYYY-MM-DD to YYYY-MM-DD ('ongoing' is an acceptable end date)

2013-08-21 to ongoing

8. Citation to use when referencing this project:

format: Lastname, I., Lastname, I. YYYY. [Title from question 1 or suitable alternative] Will be assigned if left blank.

===FORM END===

Quality Control - Create and Update Projects

Each step in the Issue checklist will be discussed here, along with other important notes required to use the Nodebooks.

Imports Cell

This section will be common for most Nodebooks: it is a cell at the top of the notebook where you will import any required packages and functions to use throughout the notebook. It must be run first, every time.

You will have to edit one section: engine = get_engine()

- Within the open brackets you need to paste the path to your database

.kdbxfile which contains your login credentials. Ensure that the path is enclosed in quotation marks. - On MacOS computers, you can usually find and copy the path to your database

.kdbxfile by right-clicking on the file and holding down the “option” key. On Windows, we recommend using the installed software Path Copy Copy, so you can copy a unix-style path by right-clicking. - The path should look like

engine = get_engine('C:/Users/username/Desktop/Auth files/database_connection.kdbx').

Project Metadata Parser

This cell is where you input the information contained in the Project Metadata .txt file. There are two ways to do this:

- You can paste in the filepath to the saved

.txt. You should ensure the formatting follows this example:project_template_path = '/path/to/project_metadata.txt' - You can paste the entire contents of the file, from

===FORM START=== to ===FORM END===inclusive, into the provided triple quotation marks.

Note that each of the above options is contained in its own cell in the notebook, and you only need to do one of the two.

Once either option is selected, you can run the cell to complete the quality control checks.

The output will have useful information:

- Are there strange characters in the collection code, project title, or abstract?

- Were the names and affiliations of each contact successfully parsed? Are there any affiliated institutions which are not found? Are there any contacts which were not found that you expected to be?

- Is the project URL formatted correctly?

- Are all the species studied found in WoRMS? Are any of them non-accepted taxonomy (entries with accepted taxonomies will have a success message of the format

INFO: Genus species is an accepted taxon, and has Aphia ID XXXXXX., followed by a URL)? Which ones have common names that do not match the WoRMS records (look at bottom of each species record for success:OK: Animal name is an acceptable vernacular name for Genus species)? NOTE: any mismatches with common name can be fixed at a later stage, make a note in the Issue for your records - Is the suggested Bounding Box appropriate based on the abstract? NOTE: any issues with the scale of the bounding box can be fixed at a later stage, make a note in the Issue for your records

- Are the start and end dates formatted correctly?

Generally, most of the error messages arise from the Contacts and Species sections.

If any information does not parse correctly, you should fix it in the source file and re-run the cell until you are happy with the output.

Manual Fields - Dropdown Menu

There are some fields which need to be set up by the Data Manager, rather than the researcher. These are in the next cell.

Run the cell to generate a fillable form with these fields:

- Node: select your node

- Collaboration Type: based on the abstract, are they deploying only tags (

Trackerproject), only receivers (Deploymentproject) or both tags and receivers (Dataproject)? - Ocean: choose the most appropriate ocean region based on the abstract.

- Shortname: usually a summarised version of the project title, which will be used as the name of the Data Portal folder. ex:

OTN Blue Sharks. - Longname: use the Title provided by the researcher, or something else, which is in “scientific-paper” style. ex:

Understanding the movements of Blue sharks through Nova Scotia waters, using acoustic telemetry. - Series Code: this will generally be the name of your node. Compare to values found in the database

obis.otn_resourcesif you’re unsure. - Institution Code: The main institution responsible for maintaining the project. Compare to values found in the database

obis.institution_codesandobis.otn_resourcesif you’re unsure. If this is a new Institution, please make a note in the Issue, so you can add it later on - Country: based upon the abstract. Multiple countries can be listed as such:

CANADA, USA, EGYPTetc. - State: based upon the abstract. Multiple states can be listed as such:

NOVA SCOTIA, NEWFOUNDLANDetc. - Local Area: based upon the abstract. Location information. ex:

Halifax - Locality: based upon the abstract. Finest-scale of location. ex:

Shubenacadie River - Status: is the project completed, ongoing or something else?

To save and parse your inputted values DO NOT re-run the cell - this will clear all your input. Instead, the next cell is the one which needs to be run to parse the information.

Verify the output from the parser cell, looking for several things:

- Are all the fields marked with a green

OK? - Is the following feedback included in the institution code section:

Found institution record for XXX in your database:followed by a small, embedded table?

If anything is wrong, please begin again from the Manual Field input cell.

If the institution code IS NOT found - compare to values found in the database obis.institution_codes and obis.otn_resources. If this is a new Institution, please make a note in the Issue, so you can add it later on

Task List Checkpoint

In Gitlab, this task can be completed at this stage:

- [ ] - NAME define type of project **select here one of Data, Deployment, Tracker**

Please edit to include the selected project type, in harmony with the selected field in the Nodebook.

Verifying Correct Information

At this stage, we have parsed all the information that we need in order to register the project in the database. There is now a cell that will print out every saved value for your review.

You are looking for:

- Typos

- Strange characters

- Correct information based on abstract

If this information is satisfactory, you can proceed.

Adjust Bounding Box

The cell titled Verify the new project details before writing to the DB is the final step for verification before the values are written to the database. At this stage, a map will appear with the proposed Bounding Box for the project.

Based on the abstract, you can use the Square Draw Tool to re-draw the bounding box until you are happy with it.

Once you are happy, you can run the next cell in order to save your bounding adjustments. The success output should be formatted like this:

--- Midpoint ---

Latitude:

Longitude:

Create New Institution

STOP - confirm there is no Push currently ongoing. If a Push is ongoing, you must wait for it to be completed before processing beyond this point.

Remember above, where we noted whether the provided institution was new or existed on obis.institution_codes? This cell is our opportunity to add any new institutions. If all institutions (for each contact, plus for the project as a whole) exist, then you can skip this cell.

To run the cell, you will need to complete:

- Institution Code: a short-code for the institution (ex: DAL)

- Institution Name: the full institution name (ex: Dalhousie University)

- Institution Website: the institution’s website (ex: https://www.dal.ca/). You can confirm the URL is valid with the

Check URLbutton. - Organization ID: Press the

Search Org IDbutton. A list of potential WikiData, GRID, ROR, EDMO and NERC results for the institution will appear. Choose the best match, and paste that URL into the blank Organization ID cell. - Institution Country: the country where the institution is headquartered

- Institution State: the state where the institution is headquartered

- Institution Sector: one of Unknown, Government/Other Public, University/College/Research Hospital, Private, or Non-profit.

Once all values are completed, press Create Institution and confirm the following output:

Institution record 'DAL' created.

You can re-run this cell as many times as you need, to add each missing institution.

Write Project to the Database

STOP - confirm there is no Push currently ongoing. If a Push is ongoing, you must wait for it to be completed before processing beyond this point.

Finally, it is time to write the project records to the database and create the new project!

First, you should run this cell with printSQL = True. This will run the code, but print the SQL query instead of running it against the database. This enables you to do a dry run and make sure everything is in order before you register your project. If there are no errors, you can edit the cell to read printSQL = False and run again. This will register the project!

You will see some output - confirm each line is accompanied by a green OK.

Task List Checkpoint

In Gitlab, this task can be completed at this stage:

- [ ] - NAME create schema and project records ("Creating and Updating project metadata" notebook)

Save Contact Information

At this stage, the next step is to add contact information for all identified collaborators.

This cell will gather and print out all contacts and their information. Review for errors. If none exist, move to the next cell.

The next cell writes to the database: STOP - confirm there is no Push currently ongoing. This cell will add each contact to the database, into the obis.contacts and obis.contacts_projects tables, as needed.

Valid output will be of this format:

Valid contact: Fred Whoriskey OTN principalInvestigator fwhoriskey@dal.ca

Created contact Fred Whoriskey

Task List Checkpoint

In Gitlab, this task can be completed at this stage:

- [ ] - NAME add project contact information ("Creating and Updating project metadata" notebook)

Add Species Information

The following section will allow you to add the required information into the obis.scientific_names table.

The first cell imports the required function.

The second cell, when run, will create an editable input form for each animal.

You should review to confirm the following:

- the scientific name matches an accepted WoRMS taxon. There should be a URL provided and no error messages.

- the common name is acceptable. If it is not, you can choose a value from the dropdown menu (taken directly from WoRMS’

vernacularlist) OR you can enter a custom value to match the common name provided by the researcher.

Once you are sure that both the scientific and common names are correct, based on the information provided by both the project and the notebook, you may click the Add to project button for each animal record you’d like to insert.

There will be a confirmation display in the notebook to demonstrate if the insertion was successful.

Task List Checkpoint

In Gitlab, this task can be completed at this stage:

- [ ] - NAME add scientificnames ("Creating and Updating project metadata" notebook)

OPTIONAL: Add Project Loan Information

The following section is used by OTN staff to track projects which are recipients of OTN-equipment loans. This section is not within the scope of this Node Manager Training, because it requires a login file for the

otnunitdatabase.

Skip to Verification

Once you scroll past the Project Loan Information section, you will see a yellow star and the words Skip to the new project Verification. You should click the button provided, which will help you scroll to the bottom of the notebook, where the Verify section is located.

This is a chance to visually review all the fields you just entered. You should run these cells and review all output to ensure the database values align with the intended insertions.

Task List Checkpoint

In Gitlab, this task can be completed at this stage:

- [ ] - NAME verify all of above ("Creating and Updating project metadata" notebook)

Other Features of this Notebook

There are more features in the Create and Update Projects Nodebook than those covered above.

Schema Extension

This section is to be used when you have a project which is either Tracker or Deployment and is expanding to become a Data project. Ex: a project which was only tagging has begun deploying receivers.

You can use this Nodebook to create the missing tables for the schema. Ex: if a tagging project begins deploying receivers, the schema would now need stations, rcvr_locations, and moorings tables created.

Schema Updating

This section is to be used to change the values contained in obis.otn_resources. The first cell will open an editable form with the existing database values. You can change the required fields (ex: abstract).

To save and parse your inputted values DO NOT re-run the cell - this will clear all your input. Instead, the next cell is the one which needs to be run to parse the information.

Review the output of the parser cell and check for typos.

The next cell will show the changes that will be made to the project data. You can copy this output and paste it into the relevant Gitlab Issue for tracking.

The final cell will make the desired changes in the database. Ensure printSQL = False if you want the cell to execute directly.

Successful output will be of this format:

'Resource record for HFX has been updated.'

The following highlighted section is relevant only to Nodes who use Plone for their document management system

Quality Control - Create Plone Users and Access

If you are part of a Node that uses Plone as your document repository, then the following will be relevant for you.

Imports Cell

This section will be common for most Nodebooks: it is a cell at the top of the notebook where you will import any required packages and functions to use throughout the notebook. It must be run first, every time.

You will have to edit one section:

engine = get_engine()

- Within the open brackets you need to open quotations and paste the path to your database

.kdbxfile which contains your login credentials.- On MacOS computers, you can usually find and copy the path to your database

.kdbxfile by right-clicking on the file and holding down the “option” key. On Windows, we recommend using the installed software Path Copy Copy, so you can copy a unix-style path by right-clicking.- The path should look like

engine = get_engine(‘C:/Users/username/Desktop/Auth files/database_connection.kdbx’).Plone Login

The first cell is another import step.

The second cell requires input:

- Proper Plone log-in information must be written in the

plone_auth = get_plone_auth('./plonetools/plone_auth.json')file.- In order to do this, click on the

Jupytericon in the top left corner of the page.- This will bring you to a list of folders and notebooks. Select the

plonetoolsfolder. From there, select theplone_auth.jsonfile and input your Plone base URL, username, and password. Please ensure the base_url in your json file ends in a slash, likehttps://members.oceantrack.org/!- You can now successfully log into Plone.

Now, when you run the cell, you should get the following output:

Auth Loaded: ------------------------------------------------------------------------------ base_url: https://members.oceantrack.org/ user_name: user verify ssl: FalseFinally, the third cell in this section will allow you to login. You should see this message:

Login Successful!Access Project Information

Some information is needed in order to create the project Plone folders.

There are three ways to enter this information:

- Access Project Information from Database

- Manual Project Information Form - Parse Contacts

- Manual Project Information Form - Insert Contacts into Textfields

The first option is generally the easiest, if the project has already been successfully written to the database using the

Create and Update ProjectsNodebook. To do this, you enter thecollectioncodeof your project, and run the cell. If there are no errors, you can click theSKIPbutton which will take you down the Nodebook to the next section.Create Missing Users

This section will use the registered project contacts and compare against existing Plone users. It will compare by email, fullname, and lastname.

If a user is found, you will not need to create a new account for them.

If a user is not found, you will have to create an account for them. To do this, you can use the editable form in the next cell.

The editable cell will allow you to choose each contact that you’d like to register, and will autofill the information (including a suggested username). The password should be left blank. Once you are happy with the form, click

Add User. An email will be sent to the new user, prompting them to set a password. Then you can repeat by selecting the next contact, etc.Once all contacts have Plone accounts (new or otherwise) you are finished.

Task List Checkpoint

In Gitlab, this task can be completed at this stage:

- [ ] - NAME create new project repository users ("Create Plone Folders and Add Users" notebook)Create Project Repository

To create the project folder you must first enter the relevant Node information:

- otnunit:

node = None- safnode, migramar, nepunit:

node = "node"- lowercase with quotation marks, fill in the value based on the path in Plone.- all other nodes (not hosted by OTN):

node = NoneRunning this cell will print out an example of the URL, for your confirmation. Ensure the

collectioncodeandNodeare correct.The expected format:

https://members.oceantrack.org/data/repository/node_name/collectioncode(node name is for SAF, MigraMar, and NEP only)If you are confident the folder path is correct, you can run the next cell and confirm the following success message:

Creating collection folder 'collectioncode'. Done! https://members.oceantrack.org/data/repository/node_name/collectioncodeTask List Checkpoint

In Gitlab, this task can be completed at this stage:

- [ ] - NAME create project repository folder ("Create Plone Folders and Add Users" notebook)Add Users to Repository

Now that the users AND folder have been created, the users must be given access to the new folder.

Using the final cell in the

Plone repository folder creationsection, you will be provided with an editable search-bar.Type in the Plone username of each contact (new and existing). Search results will appear:

- Select the User who you would like to add

- Choose their permissions

- Click “Change repo permissions” to add them to the folder.

Review for the following success message:

Changed https://members.oceantrack.org/data/repository/node_name/collectioncode sharing for username: Contributor=True Reviewer=True Editor=True Reader=TrueThen you may choose

Add another userand begin again.The acceptable folder permissions may vary depending on the project role of the contact. Here are some guidelines:

- Principal Investigator: all permissions

- Researcher: all permissions except

Reviewer- Student: all permissions except

Reviewer- Technician: only

ContributorandReader- Collaborator: only

ContributorandReaderThis is very fluid and can be edited at any time. These are guidelines only!

Task List Checkpoint

In Gitlab, this task can be completed at this stage:

- [ ] - NAME add project repository users to folder ("Create Plone Folders and Add Users" notebook)OPTIONAL: Add Project Loan Information

The following section is used by OTN staff to track projects which are recipients of OTN-equipment loans. This section is not within the scope of this Node Manager Training, because it requires a login-file for the

otnunitdatabase.

Final Steps

The remaining steps in the Gitlab Checklist are completed outside the Nodebooks.

First: you should access the created Repository folder in your browser and confirm if the title and sharing information is correct. If so, add the project metadata .txt file into the “Data and Metadata” folder to archive.

Next, you should send an email to the project contacts letting them know their project code and other onboarding information. Please note that OTN has a template we use for our onboarding emails. It is recommended that you create a template for your Node which includes relevant reporting instructions.

Finally, the GitLab ticket can be assigned to an OTN analyst for final verification in the database.

Key Points

Loading project metadata requires subjective decisions and judgement by the Data Manager

Loading project metadata is a required first step towards managing a project’s data in the Node

Tagging Metadata

Overview

Teaching: 90 min

Exercises: 0 minQuestions

How do I load new tags into the Database?

Objectives

Understand the proper template-completion

Understand how to use the Gitlab checklist

Learn how to use the

Tag-1notebookLearn how to use the

Tag-1bnotebookLearn how to use the

Tag-2notebook

Process workflow

The process workflow for tag metadata is as follows:

flowchart LR

tag_start(( )) --> get_meta(Receive

tag metadata

from researchers)

style tag_start fill:#00FF00,stroke:#00FF00,stroke-width:4px

get_meta --> gitlab(Create

Gitlab

issue)

gitlab --> inspect(Visually

inspect)

inspect --> nodebook(Process and verify

with nodebooks)

nodebook --> plone(Add metadata

to repository folder)

plone --> otn(Pass to

OTN)

otn --> end2(( ))

style end2 fill:#FF0000,stroke:#FF0000

Once a project has been registered, the next step (for Tracker and Data project types) is to begin to quality control and load the project’s tagging metadata into the database. Tagging metadata should be reported to your Node in the template provided here. This file holds information about the deployment of any and all tags (acoustic, PIT, satellite, floy etc.) in or on animals for the purposes of tracking their movements using either listening stations or via mark/recapture. Any biological metrics that were measured at tagging time, i.e. length, weight, population, are also able to be recorded for association with the tagging event, permitting future analyses.

Recall that there are multiple levels of data tables in the database for tagging records: raw tables (“raw”), cache tables (“intermediate”) and otn tables (“upper”). The process for loading tagging metadata evaluates and promotes the data through each of these levels, as reflected by the GitLab task list.

Completed Metadata

Immediately, upon receipt of the metadata, create a new GitLab issue. Please use the Tag Metadata Issue checklist template.

Here is the Issue checklist, for reference:

Tag Meta Data

- [ ] - NAME add label *'loading records'*

- [ ] - NAME load raw tag metadata (`tag-1` notebook) **put_table_name_in_ticket**